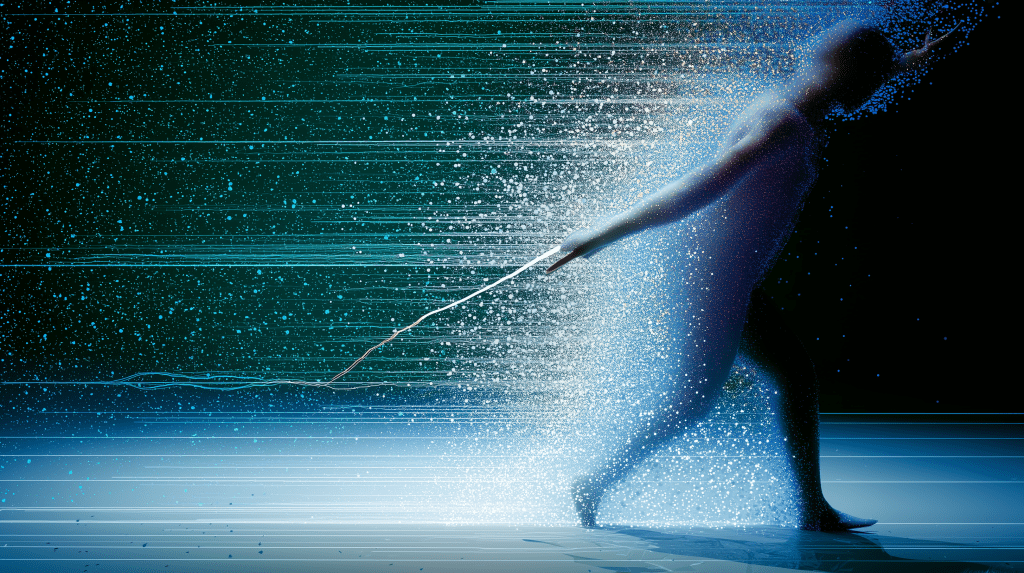

The actor stepped into the motion capture suit one last time, unaware that she was about to become the puppet master of a character that existed only inside a neural network.

The Dawn of a New Era

Sarah Chen had been working in motion capture for fifteen years when everything changed. The veteran performance capture artist had brought life to countless digital characters—from fantasy creatures to photorealistic humans—but nothing had prepared her for the morning she walked into the studio to find herself face-to-face with an AI-generated character that seemed to breathe with its own consciousness.

“Meet Aria,” the director announced, gesturing toward a monitor displaying a hyper-realistic character whose features shifted subtly with each render. “She was born from a neural network, but she needs your soul.”

This moment encapsulates the current revolution in filmmaking: the seamless integration of motion capture technology with AI-generated characters. It’s a fusion that’s redefining not just how we create digital beings, but how we think about performance, authenticity, and the very nature of character creation in cinema.

The Technical Symphony Behind the Magic

The integration of motion capture with AI-generated characters is a convergence of several technologies. At its core, the process involves three components that must stay synchronized with millisecond precision.

The Capture Layer serves as the foundation, where traditional motion capture systems record human performance with unprecedented fidelity. Modern systems can track hundreds of markers simultaneously, capturing not just gross motor movements but the subtle micro-expressions that convey emotion, the slight weight shifts that suggest internal conflict, and the barely perceptible hesitations that make characters feel genuinely human.

The AI Generation Engine operates as the creative interpreter, using machine learning algorithms trained on vast datasets of human movement, facial expressions, and behavioral patterns. These systems don’t merely copy human motion: they learn the statistical patterns in it and can predict how a character might move in situations never directly captured.

The Integration Bridge represents the most complex element: the real-time translation system that converts captured human performance into AI character animation. This involves sophisticated algorithms that account for the physical differences between human performers and AI-generated characters, ensuring that a human actor’s movements translate naturally to a character who might have different proportions, bone structure, or even species characteristics.
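The core idea of translating a performance onto a body with different proportions can be sketched in a few lines. This is a minimal illustration, not a production retargeting system: it assumes a planar two-bone chain, and the function names `forward_kinematics` and `retarget` and all bone lengths are hypothetical. The performer's joint angles are kept and only the bone lengths change, so the pose reads the same while the end positions differ.

```python
import math


def forward_kinematics(joint_angles, bone_lengths):
    """Return the 2D end position of each bone in a planar chain.

    joint_angles: rotation of each bone relative to its parent, in radians.
    bone_lengths: length of each bone, in arbitrary units.
    """
    if len(joint_angles) != len(bone_lengths):
        raise ValueError("need exactly one angle per bone")
    x = y = 0.0
    heading = 0.0
    positions = []
    for angle, length in zip(joint_angles, bone_lengths):
        heading += angle  # child rotations accumulate down the chain
        x += length * math.cos(heading)
        y += length * math.sin(heading)
        positions.append((x, y))
    return positions


def retarget(joint_angles, target_bone_lengths):
    """Reuse the performer's joint angles on a skeleton with other proportions."""
    return forward_kinematics(joint_angles, target_bone_lengths)


# Hypothetical arm pose: upper arm level, elbow bent 90 degrees.
performer_angles = [0.0, math.pi / 2]
human_arm = forward_kinematics(performer_angles, [0.30, 0.25])
creature_arm = retarget(performer_angles, [0.60, 0.20])
```

Real pipelines work in 3D, handle differing joint counts, and add constraints such as foot contact, but the principle of separating the pose (angles) from the body (lengths) is the same.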

The Workflow Revolution

The traditional motion capture pipeline has been completely reimagined to accommodate AI-generated characters. Where filmmakers once relied on pre-designed character models, they now work with AI systems that can generate characters on demand, each with unique physical characteristics and movement profiles.

The process begins with character generation, where AI systems create digital beings based on creative briefs, story requirements, or even random generation parameters. These characters arrive complete with their own movement signatures—the way they naturally hold their shoulders, their default gait, their unconscious mannerisms.

During the capture phase, performers work with AI systems that provide real-time feedback about how their movements translate to the AI character. Imagine an actor seeing their performance instantly converted to a character with completely different physical attributes, with the AI system adjusting for differences in limb length, joint flexibility, and even species-specific movement patterns.

Post-production has evolved into a collaborative process between human editors and AI systems. The captured performance data becomes the foundation for AI algorithms that can fill in gaps, smooth transitions, and even generate additional performances based on the captured style. An actor might perform a single walk cycle, and the AI can generate dozens of variations that maintain the character’s essential personality while adapting to different emotional states or environmental conditions.
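To make the idea of generating variations from a single captured walk cycle concrete, here is a deliberately simple sketch. It is not a learned model: it assumes one animation channel (for example hip height per frame) stored as a list of floats, and the names `resample` and `vary` and the parameters `amplitude` and `tempo` are hypothetical. It varies a cycle by scaling motion around its mean and by changing the frame count.

```python
def resample(samples, n_out):
    """Linearly resample a curve to n_out frames, which changes its tempo."""
    if n_out < 2 or len(samples) < 2:
        raise ValueError("need at least two samples in and out")
    last = len(samples) - 1
    out = []
    for i in range(n_out):
        t = i * last / (n_out - 1)  # position in the source curve
        lo = int(t)
        hi = min(lo + 1, last)
        frac = t - lo
        out.append(samples[lo] * (1 - frac) + samples[hi] * frac)
    return out


def vary(samples, amplitude=1.0, tempo=1.0):
    """Return a variation of a captured cycle.

    amplitude > 1 exaggerates the motion around its mean, < 1 subdues it.
    tempo > 1 plays the cycle faster (fewer frames), < 1 slower.
    """
    mean = sum(samples) / len(samples)
    scaled = [mean + amplitude * (s - mean) for s in samples]
    n_out = max(2, round(len(samples) / tempo))
    return resample(scaled, n_out)


# Hypothetical captured channel: one cycle of vertical hip offset.
captured = [0.0, 1.0, 0.0, -1.0, 0.0]
energetic = vary(captured, amplitude=2.0)
weary = vary(captured, amplitude=0.5, tempo=0.5)
```

A generative system would learn which variations suit a given emotional state; this sketch only shows the kind of controllable parameters such a system might expose.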

Creative Possibilities Unleashed

The fusion of motion capture and AI-generated characters has opened creative doors that were previously locked tight. Directors can now envision characters without the constraints of human physiology, knowing that AI systems can translate human performance to virtually any form imaginable.

Morphological Freedom represents perhaps the most exciting possibility. Characters can shift between different forms while maintaining consistent personality and movement signatures. A character might begin as humanoid but transform into an abstract geometric form, with the AI system ensuring that the essence of the performance carries through the transformation.

Emotional Amplification allows AI systems to enhance captured performances in ways that would be impossible with traditional animation. Subtle emotional cues can be amplified or diminished, micro-expressions can be exaggerated for dramatic effect, and the AI can even generate entirely new emotional states by interpolating between different captured performances.
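Both operations described here, amplifying a cue and interpolating between performances, reduce to simple arithmetic on pose data. The sketch below assumes a facial pose is a list of blendshape weights in the range 0 to 1; the function names, the three-channel layout, and the example values are all hypothetical. Amplification pushes each weight away from a neutral pose by a gain factor, and blending is a linear interpolation between two captured poses.

```python
def clamp01(value):
    """Keep a blendshape weight inside its valid 0..1 range."""
    return max(0.0, min(1.0, value))


def amplify(neutral, captured, gain):
    """Scale the captured deviation from neutral: gain > 1 exaggerates, < 1 mutes."""
    return [clamp01(n + gain * (c - n)) for n, c in zip(neutral, captured)]


def blend(pose_a, pose_b, t):
    """Linear interpolation: t=0 gives pose_a, t=1 gives pose_b."""
    return [clamp01((1 - t) * a + t * b) for a, b in zip(pose_a, pose_b)]


# Hypothetical channels: [mouth_corner_up, brow_raise, mouth_corner_down]
neutral = [0.0, 0.0, 0.0]
smile = [0.5, 0.25, 0.0]
frown = [0.0, 0.25, 0.5]

big_smile = amplify(neutral, smile, gain=2.0)
bittersweet = blend(smile, frown, t=0.5)
```

Learned systems typically interpolate in a latent space rather than directly on weights, but the controls exposed to an animator often look much like `gain` and `t` here.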

Temporal Consistency ensures that AI-generated characters maintain believable behavior across entire productions. The AI learns from every captured performance, building a comprehensive understanding of how the character moves, reacts, and exists in the world. This creates a level of consistency that would be nearly impossible to achieve through traditional animation methods.

The Human Element in an AI World

Despite the technological sophistication, the human performer remains central to the process. AI-generated characters might emerge from algorithms, but they achieve life through human performance. The technology doesn’t replace human creativity; it amplifies it.

Motion capture artists are discovering that working with AI-generated characters requires a different approach to performance. They must learn to work with characters whose physical capabilities might far exceed human limitations, or who might move according to entirely different physical rules. This has led to a new discipline of “AI character performance,” where actors learn to inhabit digital beings that exist beyond the boundaries of human experience.

The collaboration between human performers and AI systems creates a unique form of artistic partnership. The AI provides the character and the technical capability, while the human provides the soul, the intention, and the emotional truth that transforms generated imagery into believable performance.

Technical Challenges and Breakthrough Solutions

The integration process faces several significant technical hurdles that developers are continuously working to overcome. Latency issues remain a primary concern, as any delay between human performance and AI character response can break the immersive experience for both performer and audience.

Uncanny valley phenomena present unique challenges when AI-generated characters achieve near-human realism. The motion capture integration must account for the subtle imperfections that make movement feel natural, while the AI system must avoid the mathematical perfection that can make characters feel artificial.

Style consistency requires sophisticated algorithms that can maintain a character’s unique movement signature across different types of performances. An AI character’s walk cycle must feel consistent with their fighting style, their dance moves, and their emotional breakdowns.

Recent breakthroughs in neural network architectures have enabled real-time processing of motion capture data through AI character generation systems. Machine learning models can now predict and generate character animations with latency measured in milliseconds rather than seconds, making real-time performance capture with AI characters a practical reality.
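Why milliseconds matter becomes clear once the frame budget is written down. At a given frame rate, every stage between the performer and the rendered character has to fit inside one frame interval for the preview to keep up. The sketch below does that arithmetic; the stage names and timings are invented for illustration and are not measurements from any real system.

```python
def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def check_pipeline(stage_latencies_ms, fps):
    """Compare the summed per-stage latency against the per-frame budget."""
    total = sum(stage_latencies_ms.values())
    budget = frame_budget_ms(fps)
    return {
        "total_ms": total,
        "budget_ms": budget,
        "within_budget": total <= budget,
    }


# Hypothetical per-stage timings in milliseconds.
stages = {
    "marker_capture": 4.0,
    "skeleton_solve": 3.0,
    "model_inference": 6.0,
    "render": 5.0,
}

at_60fps = check_pipeline(stages, fps=60)
at_30fps = check_pipeline(stages, fps=30)
```

With these made-up numbers the pipeline totals 18 ms, which fits a 30 fps budget of about 33.3 ms but overruns a 60 fps budget of about 16.7 ms. A model that takes seconds per frame could never fit either budget, which is why the move to millisecond-scale inference is what makes live preview possible.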

Industry Impact and Market Evolution

The motion capture industry is experiencing a fundamental transformation as AI-generated characters become mainstream. Traditional character design workflows are being reimagined, with AI systems capable of generating dozens of character variations in the time it previously took to create a single character.

Production Timelines are accelerating dramatically. Where character development once required months of design, modeling, and rigging, AI systems can generate production-ready characters in hours or even minutes. This acceleration allows for unprecedented creative experimentation and iteration.

Cost Structures are shifting as the technology democratizes high-end character creation. Independent filmmakers can now access character generation capabilities that were previously available only to major studios with massive budgets.

Creative Roles are evolving as the industry adapts to new workflows. Character designers are becoming AI operators, motion capture artists are learning to work with non-human characters, and directors are discovering new ways to visualize and communicate their creative vision.

The Future Landscape

The trajectory of motion capture integration with AI-generated characters points toward even more revolutionary developments. Predictive performance systems are emerging that can anticipate an actor’s next movement and pre-generate appropriate character responses, creating seamless real-time interaction between human performers and AI characters.
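The simplest possible form of motion prediction is constant-velocity extrapolation, shown below as a stand-in for the learned predictors described above. It assumes a joint position is a tuple of coordinates sampled once per frame; the function name `predict_next` and the parameter `lookahead_frames` are hypothetical.

```python
def predict_next(prev_pos, curr_pos, lookahead_frames=1):
    """Extrapolate a joint position assuming it keeps its current velocity.

    prev_pos, curr_pos: coordinates from the previous and current frame.
    lookahead_frames: how many frames ahead to predict.
    """
    if len(prev_pos) != len(curr_pos):
        raise ValueError("positions must have the same number of coordinates")
    return tuple(
        c + lookahead_frames * (c - p) for p, c in zip(prev_pos, curr_pos)
    )


# Hypothetical wrist positions (x, y, z) on two consecutive frames.
previous = (0.0, 1.0, 2.0)
current = (1.0, 1.0, 1.5)

one_ahead = predict_next(previous, current)
two_ahead = predict_next(previous, current, lookahead_frames=2)
```

A predictive performance system would replace this linear guess with a model trained on the actor's movement, but the contract is the same: given recent frames, return an estimate of where the body will be shortly, so the character's response can be prepared before the movement finishes.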

Emotional AI systems are being developed that can analyze the emotional content of captured performances and generate appropriate responses in AI characters. This technology promises to create more believable interactions between human and AI characters, with the AI characters responding not just to physical movements but to the emotional intentions behind them.

Hybrid consciousness is the most speculative frontier of development, where AI characters begin to exhibit emergent behaviors that no one explicitly authored. These characters might develop their own movement preferences, emotional responses, and personality quirks that emerge from the interaction between captured human performance and AI generation systems.

Ethical Considerations and Creative Responsibility

The power to create AI-generated characters raises important questions about representation, authenticity, and creative responsibility. As these characters become more sophisticated and lifelike, creators must grapple with questions about whose likeness is being used, how cultural characteristics are represented, and what responsibilities come with creating digital beings that might be indistinguishable from real people.

The motion capture integration process must be designed with ethical considerations at its core, ensuring that the technology serves to enhance human creativity rather than replace it, and that the characters generated represent the diversity and complexity of human experience.

Conclusion: The Soul in the Machine

Standing in that motion capture studio, Sarah Chen realized she wasn’t just bringing life to another digital character—she was participating in the birth of a new form of artistic expression. The AI-generated character Aria wasn’t just a collection of pixels and algorithms; she was a canvas for human creativity, a bridge between the possible and the impossible.

The integration of motion capture technology with AI-generated characters represents more than a technical achievement. It’s a testament to human creativity’s ability to find new forms of expression, to push beyond the boundaries of what’s possible, and to create art that speaks to the fundamental human experience of consciousness, emotion, and connection.

As this technology continues to evolve, we’re not just witnessing the future of filmmaking—we’re participating in the creation of new forms of life, digital beings that exist at the intersection of human performance and artificial intelligence. The motion capture suit becomes a bridge between worlds, allowing human souls to inhabit digital bodies and bring genuine emotion to characters born from pure imagination.

The revolution is just beginning, and every performance capture session, every AI-generated character, and every seamless integration brings us closer to a future where the line between human and digital performance disappears entirely. In this future, the only limit to character creation is the breadth of human imagination and the depth of human emotion.

Sarah stepped out of the motion capture suit, but Aria continued to breathe on the monitor, a digital soul animated by human performance and sustained by artificial intelligence—a perfect fusion of technology and humanity that would redefine storytelling for generations to come.