1. Mise-en-Scène

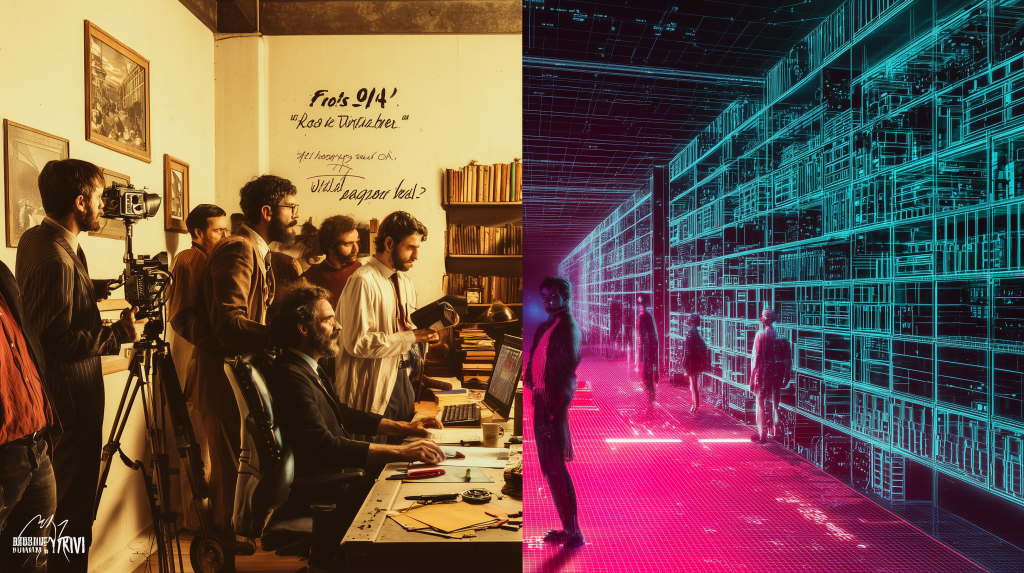

- Classic Film Theory: A French term meaning “placing on stage,” this concept encompasses everything the audience sees within the frame. It is the director’s art of arranging all visual elements—including set design, props, lighting, costumes, and actor positioning (blocking)—to create a specific mood and convey thematic ideas.1

- AI Filmmaking Age Translation: Procedural World-Building & Asset Consistency

- Explanation: Instead of physically building sets, the AI filmmaker uses text prompts and procedural generation to create entire worlds from scratch.4 The primary challenge shifts from physical logistics to prompt craftsmanship. The new art lies in ensuring “asset consistency”—making sure that a generated character, prop, or location maintains a coherent look, style, and history across multiple, independently generated scenes.5 AI tools can generate not just landscapes and cities, but also the cultures, backstories, and languages that give these worlds depth.6

- Suggested Titles:

- Generative World-Building: From Prompt to Planet

- Maintaining Aesthetic Coherence in Procedurally Generated Environments

- Prompting Mise-en-Scène: The Art of Digital Set Dressing
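To make the idea of asset consistency more concrete, here is a minimal sketch. It assumes a generator that accepts plain-text prompts. Each recurring asset gets one locked description, and that description is pasted verbatim into every scene prompt. A small check then flags any prompt where a description was dropped or edited. The `Asset` class, the `build_scene_prompt` and `missing_assets` helpers, and the sample descriptions are all hypothetical and do not come from any specific tool's API. Verbatim reuse tends to improve consistency, but it does not guarantee it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    """A locked, reusable description of a character, prop, or location (hypothetical)."""
    name: str
    description: str


def build_scene_prompt(action: str, assets: list[Asset], style: str) -> str:
    # Paste each asset's locked description verbatim, so independently
    # generated scenes all receive the same visual specification.
    asset_text = "; ".join(f"{a.name}: {a.description}" for a in assets)
    return f"{action}. Recurring assets: {asset_text}. Style: {style}."


def missing_assets(prompt: str, assets: list[Asset]) -> list[str]:
    # Flag any asset whose locked description was edited or dropped from a prompt.
    return [a.name for a in assets if a.description not in prompt]


# Hypothetical assets and style, for illustration only.
HERO = Asset("Mara", "woman in her 30s, cropped silver hair, red wool coat")
LANTERN = Asset("lantern", "dented brass storm lantern with green glass")
STYLE = "35mm film grain, muted teal-and-amber palette"

prompts = [
    build_scene_prompt("Mara crosses a snowy bridge at dusk", [HERO, LANTERN], STYLE),
    build_scene_prompt("Mara sets the lantern on a tavern table", [HERO, LANTERN], STYLE),
]
for p in prompts:
    print(p)
```

Storing the descriptions once, instead of retyping them for each scene, keeps wording drift from creeping in between prompts.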

2. Auteur Theory

- Classic Film Theory: This theory posits that the director is the primary “author” of a film. A true auteur’s work is marked by a distinctive personal vision, with recognizable stylistic signatures and recurring themes that are evident across their entire filmography.7

- AI Filmmaking Age Translation: Stylistic Model Training & Prompt Signature

- Explanation: The AI-era auteur is a creator who develops a unique “prompt signature”: a specific style of writing and structuring prompts that yields a recognizable aesthetic. More advanced auteurs may train custom AI models on their own body of work or on a curated dataset of influences, creating a generative tool that inherently understands their vision.11 Authorship becomes a process of ideation, curation, and filtering, in which the artist’s skill lies in guiding and refining the AI’s output to match their personal style.13

- Suggested Titles:

- The AI Auteur: Defining a Voice Through Prompt Engineering

- Custom Model Training for a Signature Cinematic Style

- Generative Synesthesia: Blending Human Ideation with AI Execution 13

3. Three-Point Lighting

- Classic Film Theory: The standard professional method for illuminating a subject using three distinct light sources: a key light (main light), a fill light (to soften shadows), and a backlight (to separate the subject from the background). This technique shapes the subject and creates a sense of three-dimensionality.14

- AI Filmmaking Age Translation: Algorithmic Illumination & Post-Production Relighting

- Explanation: Physical lamps and gels are replaced by descriptive prompts that define the lighting environment. The filmmaker specifies the quality, direction, and mood of the light (e.g., “soft morning light filtering through a window,” “harsh, flickering neon from a street sign”).17 Some more advanced AI systems can also “relight” footage in post-production. They analyze a generated 2D video, estimate its material properties (such as surface texture and reflectivity), and then apply an entirely new lighting scheme.18

- Suggested Titles:

- Prompting Light: The Language of AI Cinematography

- Virtual Production: AI-Powered Relighting and Compositing

- From Chiaroscuro to High-Key: Controlling Mood with Algorithmic Illumination
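The sketch below makes the relighting step described above more concrete. It assumes a relighting system has already estimated a surface normal and a diffuse albedo (reflectivity) for each pixel. Shading uses the simple Lambertian diffuse model, which ignores specular highlights, cast shadows, and light falloff. A three-point rig is expressed as a list of (direction, intensity) pairs. Re-evaluating the same material estimates under a different rig is the essence of relighting. The function names, rig values, and coordinate convention are illustrative assumptions and do not describe how any particular tool works internally.

```python
import math

Vec = tuple[float, float, float]


def normalize(v: Vec) -> Vec:
    length = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    return (v[0] / length, v[1] / length, v[2] / length)


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def relight(albedo: float, normal: Vec, rig: list[tuple[Vec, float]]) -> float:
    """Lambertian diffuse shading: albedo * sum(intensity * max(0, n . l))."""
    n = normalize(normal)
    return albedo * sum(
        intensity * max(0.0, dot(n, normalize(direction)))
        for direction, intensity in rig
    )


# Assumed convention: +z points toward the camera, +y is up, and each
# direction points from the surface toward the lamp.
THREE_POINT = [
    ((-1.0, 1.0, 1.0), 1.0),   # key: camera-left, above, in front
    ((1.0, 0.0, 1.0), 0.4),    # fill: camera-right, weaker, softens key shadows
    ((0.0, 1.0, -1.0), 0.8),   # backlight: behind and above, rims the subject
]
LOW_KEY_SIDE = [((1.0, 0.2, 0.5), 1.0)]  # a single hard source from camera-right

# Hypothetical estimated material properties for two pixels on a face.
cheek = (0.8, (0.0, 0.0, 1.0))   # faces the camera
crown = (0.8, (0.0, 1.0, 0.0))   # top of the head, faces up

for label, (albedo, normal) in [("cheek", cheek), ("crown", crown)]:
    # Brightness under the original three-point rig, then under the new rig.
    print(label,
          round(relight(albedo, normal, THREE_POINT), 3),
          round(relight(albedo, normal, LOW_KEY_SIDE), 3))
```

In this model the backlight adds nothing to the camera-facing cheek but brightens the upward-facing crown. This matches the backlight's role of separating the subject's outline from the background.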

4. Depth of Field (Deep & Shallow Focus)

- Classic Film Theory: The control of focus within the frame. Deep focus uses a large depth of field to keep the foreground, middle ground, and background simultaneously sharp. Shallow focus uses a narrow depth of field to isolate a subject by blurring their surroundings.19

- AI Filmmaking Age Translation: Generative Depth Mapping & Simulated Bokeh

- Explanation: Depth of field is no longer solely a physical property of a camera’s lens and aperture. AI models can analyze a generated 2D image and create a “depth map,” which estimates how far each point in the scene is from the camera.23 Using this data, the filmmaker can choose a plane of focus, simulate the aesthetic blur of out-of-focus areas (bokeh), and even create effects such as a rack focus, all through software rather than physical lens adjustments.24

- Suggested Titles:

- AI Depth Maps: Creating 3D Space from 2D Prompts

- Simulating Lens Properties: Aperture and Bokeh in Generative Video

- Controlling Viewer Focus with Algorithmic Depth of Field
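The depth-map workflow described above can be sketched as follows. The sketch assumes a depth map that gives each pixel's distance in meters. Monocular depth estimators often output only relative depth, which would first need rescaling. Distance from the focal plane is converted into a blur size using the standard thin-lens circle-of-confusion formula. A per-pixel box blur is then applied along one image row, and the focus distance is animated to approximate a rack focus. The function names, the 50 mm f/1.8 lens, the 36 mm sensor width, and the linear focus pull are all illustrative assumptions. Production tools use better blur kernels and handle occlusion edges, which this simple sketch does not.

```python
def coc_diameter_mm(depth_m: float, focus_m: float, focal_mm: float, f_number: float) -> float:
    """Thin-lens circle-of-confusion diameter on the sensor, in millimeters.

    Assumes depth_m > 0 and focus_m greater than the focal length.
    """
    f = focal_mm / 1000.0          # focal length in meters
    aperture = f / f_number        # aperture diameter in meters
    c = aperture * abs(depth_m - focus_m) / depth_m * f / (focus_m - f)
    return c * 1000.0              # meters -> millimeters


def simulate_dof_row(pixels, depths, focus_m, focal_mm=50.0, f_number=1.8, sensor_mm=36.0):
    """Blur one row of gray values; each pixel's blur radius comes from its own depth."""
    px_per_mm = len(pixels) / sensor_mm      # assumes the row spans the full sensor width
    out = []
    for i, depth in enumerate(depths):
        blur_px = coc_diameter_mm(depth, focus_m, focal_mm, f_number) * px_per_mm
        radius = round(blur_px / 2)
        lo, hi = max(0, i - radius), min(len(pixels), i + radius + 1)
        window = pixels[lo:hi]
        out.append(sum(window) / len(window))   # simple box blur
    return out


def rack_focus_schedule(start_m: float, end_m: float, frames: int) -> list[float]:
    """Move the focal plane linearly from start_m to end_m over `frames` frames (frames >= 2)."""
    return [start_m + (end_m - start_m) * k / (frames - 1) for k in range(frames)]


# A 1920-pixel row containing a dark-to-bright edge in a background 10 m away.
row = [0.0] * 960 + [1.0] * 960
depth_map = [10.0] * 1920
sharp = simulate_dof_row(row, depth_map, focus_m=10.0)  # focused on the background
soft = simulate_dof_row(row, depth_map, focus_m=2.0)    # focused on a subject at 2 m
frames = [simulate_dof_row(row, depth_map, f) for f in rack_focus_schedule(2.0, 10.0, 5)]
```

Pulling focus from 2 m to 10 m across the frames gradually sharpens the background edge, which is the visual signature of a rack focus.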

5. Dolly Zoom (Vertigo Effect)

- Classic Film Theory: A disorienting in-camera effect achieved by physically moving the camera toward or away from a subject while simultaneously zooming the lens in the opposite direction. This keeps the subject the same size while the background appears to dramatically compress or expand.25

- AI Filmmaking Age Translation: Virtual Camera Kinematics & Perspective Warping

- Explanation: The complex, coordinated physical movement of a dolly zoom can be approximated through a single text prompt describing the virtual camera’s motion.28 The AI interprets a command like “dolly zoom on the character’s face to show their sudden realization” and attempts to generate the signature perspective distortion. The craft shifts from the mechanical precision of a camera crew to the linguistic precision needed in the prompt to achieve the desired psychological effect.27

- Suggested Titles:

- Simulating In-Camera Effects: The AI Dolly Zoom

- Prompting Virtual Camera Movements for Psychological Effect

- Beyond the Dolly: Advanced Kinematics in Generative Cinematography
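The geometry behind the effect shows what a dolly-zoom prompt asks the model to imitate. Under a simple pinhole-camera model, a subject's size on screen is proportional to focal length divided by subject distance. Holding that ratio fixed while the camera moves therefore keeps the subject steady while the angle of view changes. The sketch below computes such a schedule, which could serve as a reference when judging a generated shot or when driving a 3D virtual camera. The function names, the linear dolly path, and the 36 mm sensor width are illustrative assumptions.

```python
import math


def dolly_zoom_schedule(start_distance_m, end_distance_m, start_focal_mm, frames):
    """(distance, focal length) per frame that holds the subject's on-screen size fixed.

    Pinhole model: image size ~ focal_length * subject_size / distance, so keeping
    focal_length / distance constant keeps the subject the same size. Needs frames >= 2.
    """
    schedule = []
    for k in range(frames):
        t = k / (frames - 1)                          # 0.0 -> 1.0 along a linear dolly path
        distance = start_distance_m + (end_distance_m - start_distance_m) * t
        focal = start_focal_mm * distance / start_distance_m
        schedule.append((distance, focal))
    return schedule


def horizontal_fov_deg(focal_mm, sensor_width_mm=36.0):
    """Horizontal angle of view for a given focal length and sensor width."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_mm)))


# Dolly in from 4 m to 2 m while zooming out from 50 mm: the subject holds its
# size, the angle of view widens, and the background appears to stretch away.
for distance, focal in dolly_zoom_schedule(4.0, 2.0, 50.0, frames=3):
    print(f"{distance:.1f} m  {focal:.1f} mm  {horizontal_fov_deg(focal):.1f} deg")
```

Reversing the move (dolly out while zooming in) narrows the angle of view, so the background appears to compress toward the subject.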

6. Cross-Cutting (Parallel Editing)

- Classic Film Theory: An editing technique that alternates between two or more scenes happening in different locations, often at the same time. This is used to build suspense, create dramatic irony, or draw thematic comparisons between the different lines of action.29

- AI Filmmaking Age Translation: Multi-Threaded Narrative Generation & Consistency Locking

- Explanation: Cross-cutting in an AI workflow involves generating multiple narrative threads from a series of parallel prompts. The editor’s role becomes curating these generated clips and sequencing them for maximum impact. The key technical hurdle is “consistency locking”: ensuring that each character’s appearance, location, and time of day remain coherent within its narrative thread, even as the AI generates each shot independently.22 AI editing assistants can then analyze the generated footage and suggest effective cuts by identifying key moments or matching actions across the threads.33

- Suggested Titles:

- Generative Parallel Narratives: The Art of the AI Cross-Cut

- Maintaining Character and World Consistency Across Multiple Prompts

- AI-Assisted Pacing: Automating Suspense with Smart Cuts
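Here is a minimal sketch of the cross-cutting workflow described above. It assumes each thread is generated from plain-text prompts. The attributes to lock (character appearance, location, time of day) are stored once per thread and repeated in every shot prompt. The shots are then alternated to form a simple cross-cut. The `Thread` class, the `cross_cut` function, and the sample content are hypothetical. Strict alternation is only a starting order, which an editor or AI editing assistant would then re-time for pacing.

```python
from dataclasses import dataclass, field
from itertools import zip_longest


@dataclass
class Thread:
    """One narrative thread whose attributes are locked for every shot (hypothetical)."""
    name: str
    locked: dict[str, str]                      # e.g. character look, location, time of day
    beats: list[str] = field(default_factory=list)

    def shot_prompts(self) -> list[str]:
        # Every shot in the thread repeats the same locked attributes.
        lock_text = ", ".join(f"{k}: {v}" for k, v in self.locked.items())
        return [f"[{self.name}] {beat} | {lock_text}" for beat in self.beats]


def cross_cut(*threads: Thread) -> list[str]:
    """Alternate shots between threads (A1, B1, A2, B2, ...), appending any leftovers."""
    sequence = []
    for group in zip_longest(*(t.shot_prompts() for t in threads)):
        sequence.extend(p for p in group if p is not None)
    return sequence


vault = Thread("vault",
               {"character": "Ines, black turtleneck", "location": "bank vault", "time": "night"},
               ["Ines cracks the dial", "The vault door swings open"])
station = Thread("station",
                 {"character": "Detective Roe, gray suit", "location": "precinct office", "time": "night"},
                 ["Roe reviews the camera feeds", "Roe grabs his keys", "Roe sprints to his car"])

for shot in cross_cut(vault, station):
    print(shot)
```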

7. Diegetic vs. Non-Diegetic Sound

- Classic Film Theory: The distinction between sounds that originate from within the world of the film (diegetic), such as dialogue or a car engine, and sounds added for the audience’s benefit that the characters cannot hear (non-diegetic), such as the musical score or a narrator’s voiceover.34

- AI Filmmaking Age Translation: Prompt-Based Soundscape Generation

- Explanation: In the AI age, both diegetic and non-diegetic sounds can be generated from text prompts, with the creator describing the desired audio in words. A diegetic prompt might be “the sound of rain hitting a windowpane in a quiet room,” while a non-diegetic prompt could be “an epic, swelling orchestral score to heighten the emotion.”36 AI tools can generate everything from specific sound effects to complete musical compositions, which can then be layered and mixed, making the filmmaker a conductor of an algorithmic orchestra.38

- Suggested Titles:

- Text-to-Sound: Crafting Diegetic and Non-Diegetic Audio with AI

- Generative Scoring: Creating Emotional Impact with AI Music

- The Algorithmic Soundscape: Immersive Audio in AI Film
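Once the diegetic and non-diegetic layers have been generated, combining them is ordinary audio mixing. The sketch below assumes each generated layer has already been decoded to mono floating-point samples in the range [-1, 1] at a shared sample rate. It applies a per-layer gain in decibels, sums the layers, and hard-clips the result. Sine tones and seeded noise stand in for real generated audio. All names and gain values are illustrative assumptions. A real mix would also use panning, equalization, and a limiter rather than hard clipping.

```python
import math
import random

SAMPLE_RATE = 8000  # samples per second; kept low to keep the example light


def db_to_gain(db: float) -> float:
    """Convert a level change in decibels to a linear amplitude multiplier."""
    return 10 ** (db / 20)


def mix(layers: list[tuple[list[float], float]]) -> list[float]:
    """Apply each layer's gain (dB), sum sample by sample, then hard-clip to [-1, 1]."""
    length = max(len(samples) for samples, _ in layers)
    out = [0.0] * length
    for samples, gain_db in layers:
        gain = db_to_gain(gain_db)
        for i, s in enumerate(samples):
            out[i] += gain * s
    return [max(-1.0, min(1.0, s)) for s in out]


def sine(freq_hz: float, seconds: float, amplitude: float = 0.5) -> list[float]:
    n = int(SAMPLE_RATE * seconds)
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(n)]


# Stand-ins for generated audio (illustrative only):
random.seed(7)
rain = [random.uniform(-0.3, 0.3) for _ in range(SAMPLE_RATE)]       # diegetic: rain noise bed
score = [a + b for a, b in zip(sine(220.0, 1.0), sine(330.0, 1.0))]  # non-diegetic: two-note chord
scene = mix([(rain, -6.0), (score, -12.0)])
```

Keeping gains in decibels matches how mixing levels are usually discussed. A -6 dB change roughly halves a layer's amplitude.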